Edit Mind lets you index your videos (including transcription, frame analysis, and multi-model embedding) and then search them, or specific scenes within them, using natural language.

Development Status: Edit Mind is currently in active development and not yet production-ready. Expect incomplete features and occasional bugs. We welcome contributors to help us reach v1.0!

Note: The name "Edit Mind" comes from "Video Editor Mind": the goal is for it to become the video editor's second brain and companion.

Click to watch a walkthrough of Edit Mind's core features.

- Search videos by spoken words, objects, faces, and more.

- Can run fully locally (with Ollama or a local model), respecting your privacy.

- Works on any computer or server with Docker installed.

- Uses AI for rich metadata extraction and semantic search.

- Video Indexing and Processing: A background service watches for new video files and queues them for AI-powered analysis.

- AI-Powered Video Analysis: Extracts metadata through face recognition, transcription, object and text detection, scene analysis, and more.

- Vector-Based Semantic Search: Natural-language search over video content, powered by vector embeddings stored in ChromaDB.

- Web Interface: Access your video library through a web app.

| Area | Technology |
|---|---|
| Monorepo | pnpm workspaces |
| Containerization | Docker, Docker Compose |
| Web Service | React Router v7, TypeScript, Vite |
| Background Jobs Service | Node.js, Express.js, BullMQ |
| ML Service | Python, OpenCV, PyTorch, OpenAI Whisper, Google Gemini or Ollama (for NLP) |
| Vector Database | ChromaDB |
| Relational DB | PostgreSQL (via Prisma ORM) |

Edit Mind uses Docker Compose to run everything in containers.

Click to watch a walkthrough of Edit Mind's setup guide.

- Docker Desktop installed and running.

- That's it! Everything else runs in containers.
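Before continuing, you can confirm that Docker is ready. The sketch below defines a hypothetical `check_prereqs` shell function, which is not part of Edit Mind. It relies only on `command -v`, `docker info` (which fails when the Docker daemon is not running), and `docker compose version` (which fails when the Compose v2 plugin is missing).

```shell
#!/usr/bin/env sh
# check_prereqs: hypothetical pre-flight helper (not shipped with Edit Mind).
# Prints a hint and returns non-zero if something the setup needs is missing.
check_prereqs() {
  # 1. The docker CLI must be on PATH.
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker CLI not found: install Docker Desktop first" >&2
    return 1
  fi
  # 2. The Docker daemon must be reachable (Docker Desktop started).
  if ! docker info >/dev/null 2>&1; then
    echo "Docker daemon not reachable: start Docker Desktop" >&2
    return 1
  fi
  # 3. 'docker compose' (Compose v2) is used by the commands below.
  if ! docker compose version >/dev/null 2>&1; then
    echo "'docker compose' not available: update Docker" >&2
    return 1
  fi
  echo "Docker and Docker Compose look ready"
}
```

Paste the function into a terminal, then run `check_prereqs`.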

mkdir edit-mind

cd edit-mind

Important: Before proceeding, configure Docker to access your media folder.

macOS/Windows:

- Open Docker Desktop

- Go to Settings → Resources → File Sharing

- Add the path where your videos are stored (e.g., /Users/yourusername/Videos)

- Click Apply & Restart

Linux: File sharing is typically enabled by default.
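To double-check the folder you shared, you can use the hypothetical `count_videos` helper below, which is not part of Edit Mind. It confirms that the path is a directory and counts files with common video extensions. The extension list is an assumption, and Edit Mind's supported formats may differ.

```shell
#!/usr/bin/env sh
# count_videos: hypothetical helper (not part of Edit Mind).
# Verifies that a folder exists and prints how many video files it contains.
# The extension list below is an assumption; adjust it to your formats.
count_videos() {
  dir="$1"
  if [ ! -d "$dir" ]; then
    echo "Not a directory: $dir" >&2
    return 1
  fi
  # Search recursively; -iname matches extensions case-insensitively.
  # tr strips the padding that BSD/macOS wc adds to its count.
  find "$dir" -type f \( -iname '*.mp4' -o -iname '*.mov' -o -iname '*.mkv' \
    -o -iname '*.avi' -o -iname '*.webm' -o -iname '*.m4v' \) | wc -l | tr -d ' '
}
```

For example, run `count_videos /Users/yourusername/Videos`. To confirm that Docker itself can read the folder, mount it read-only in a throwaway container: `docker run --rm -v /Users/yourusername/Videos:/media:ro alpine ls /media`.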

Edit Mind uses a two-file environment configuration:

- .env: Your personal configuration (required)

- .env.system: System defaults (required)

Download the example environment files and the Docker Compose file:

curl -L https://raw.githubusercontent.com/IliasHad/edit-mind/refs/heads/main/.env.example -o .env

curl -L https://raw.githubusercontent.com/IliasHad/edit-mind/refs/heads/main/.env.system.example -o .env.system

curl -L https://raw.githubusercontent.com/IliasHad/edit-mind/refs/heads/main/docker-compose.yml -o docker-compose.yml

If you have an NVIDIA GPU, download the docker-compose.cuda.yml file instead (it is saved as docker-compose.yml):

curl -L https://raw.githubusercontent.com/IliasHad/edit-mind/refs/heads/main/docker-compose.cuda.yml -o docker-compose.yml

Edit the .env file and configure these critical settings:

# 1. SET YOUR VIDEO FOLDER PATH (REQUIRED)

# Must match the path you added to Docker File Sharing

HOST_MEDIA_PATH="/Users/yourusername/Videos"

# 2. CHOOSE AI MODEL (Pick one option)

# Option A: Use Ollama (more private, requires model download)

USE_OLLAMA_MODEL="true"

OLLAMA_HOST="http://host.docker.internal"

OLLAMA_PORT="11434"

OLLAMA_MODEL="qwen2.5:7b-instruct"

# Make sure the Ollama server is running first:

# OLLAMA_HOST=0.0.0.0:11434 ollama serve

# and that the model has been pulled:

# ollama pull qwen2.5:7b-instruct

# Option B: Use Local Model (more private, requires model download)

# USE_LOCAL_MODEL="true"

# SEARCH_AI_MODEL="/app/models/path/to/.gguf"

# Download the model and save it to the models folder in the project root directory

# Option C: Use Gemini API (requires API key)

USE_LOCAL_MODEL="false"

GEMINI_API_KEY="your-gemini-api-key-from-google-ai-studio"

# 3. GENERATE SECURITY KEYS (REQUIRED)

# Generate with: openssl rand -base64 32

ENCRYPTION_KEY="your-random-32-char-base64-key"

# Generate with: openssl rand -hex 32

SESSION_SECRET="your-random-session-secret"

Quick Key Generation:

# Generate ENCRYPTION_KEY

openssl rand -base64 32

# Generate SESSION_SECRET

openssl rand -hex 32

Start all services with a single command:

docker compose up

Once all services are running (look for "ready" messages in the logs), open:

- Web App: http://localhost:3745

If you're using Safari, use http://127.0.0.1:3745
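If you would rather script this check than watch the logs, you can poll the web app until it answers. The sketch below defines a hypothetical `wait_for_url` function, which is not part of Edit Mind. The default of 60 attempts, one second apart, is an arbitrary assumption. Because `curl -f` only fails on HTTP status 400 or above, a redirect to the login page still counts as "up".

```shell
#!/usr/bin/env sh
# wait_for_url: hypothetical helper (not part of Edit Mind).
# Polls URL once per second until it responds without an HTTP error,
# giving up after MAX_TRIES failed attempts (default 60, an arbitrary choice).
wait_for_url() {
  url="$1"
  max_tries="${2:-60}"
  tries=0
  while :; do
    # -f: treat HTTP >= 400 as failure; -s: no progress output;
    # -o /dev/null: discard the body; --max-time: cap each attempt at 5 s.
    if curl -fs -o /dev/null --max-time 5 "$url"; then
      return 0
    fi
    tries=$((tries + 1))
    if [ "$tries" -ge "$max_tries" ]; then
      return 1
    fi
    sleep 1
  done
}
```

For example, start the stack in the background with `docker compose up -d`, then run `wait_for_url http://127.0.0.1:3745 120 && echo "Edit Mind is up"`. You can still follow the logs with `docker compose logs -f`.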

- Navigate to the web app at http://localhost:3745

- Log in using admin@example.com with the password admin

- Navigate to the settings page at http://localhost:3745/app/settings

- Click "Add Folder"

- Select a folder from your HOST_MEDIA_PATH location

- Navigate to the folder details page and click Rescan

- The background job service will automatically start processing your videos and watching the folder for new video files

A huge thank you to the r/selfhosted community on Reddit for their amazing support, valuable feedback, and encouragement.

Original discussion: https://www.reddit.com/r/selfhosted/comments/1ogis3j/i_built_a_selfhosted_alternative_to_googles_video/

We welcome contributions of all kinds! Please read CONTRIBUTING.md for details on our code of conduct and the process for submitting pull requests.

Follow the steps below to set up a development environment if you want to extend the app's functionality or fix bugs.

git clone https://github.com/iliashad/edit-mind

cd edit-mind

cp .env.system.example docker/.env.system

cp .env.example docker/.env.dev

pnpm install

cd docker

docker-compose -f docker-compose.dev.yml up --build

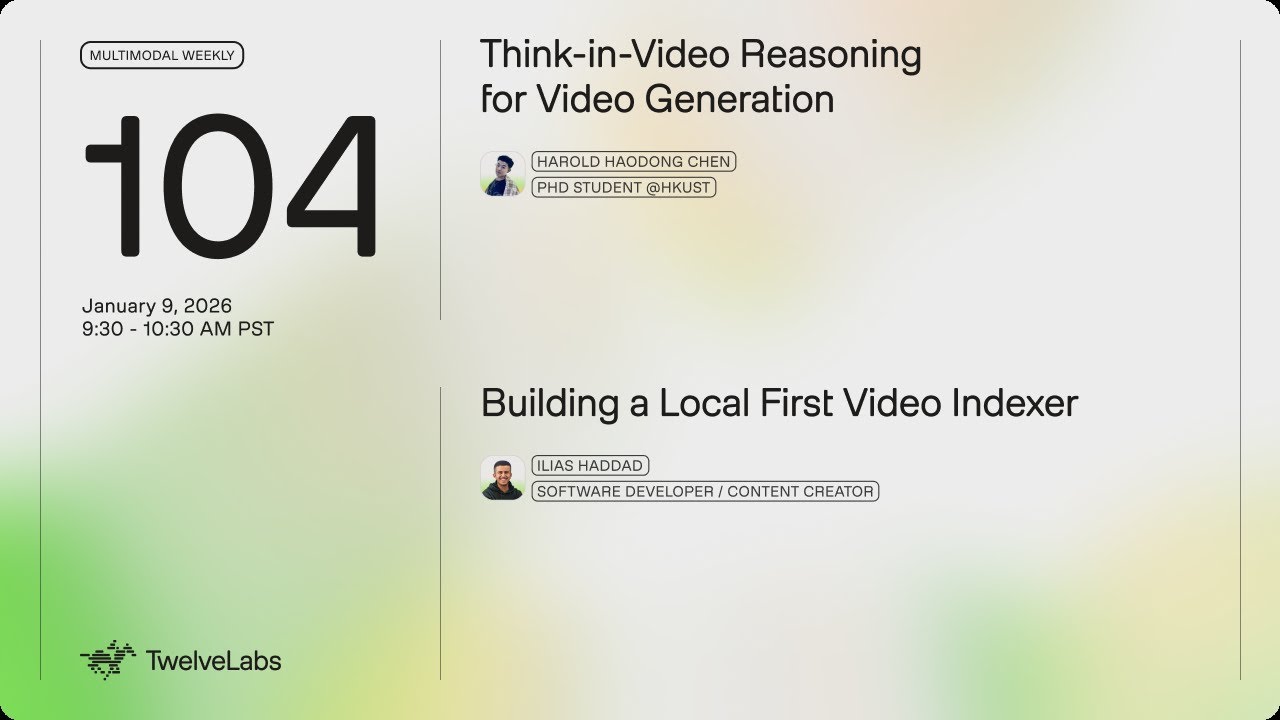

Watch the Edit Mind presentation at Twelve Labs (starts at 21:12)

This project is licensed under the MIT License - see the LICENSE.md file for details.